Researchers Introduce New Algorithm Substantially Reduce Machine Learning Time

Date:06-03-2020 | 【Print】 【close】

Researchers from Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences, introduced a simple DRL (deep reinforcement learning) algorithm with m-out-of-n bootstrap technique and aggregated multiple DDPG (Deep deterministic policy gradient algorithm) structures, named it as BAMDDPG (bootstrapped aggregated multi-DDPG), successfully accelerated the training process and increased the performance in the area of intelligent artificial research.

The research team led by Prof. LI Huiyun, have tested their algorithm on 2D robot and open racing car simulator (TORCS), according to their paper “Deep Ensemble Reinforcement Learning with Multiple Deep Deterministic Policy Gradient Algorithm” published in Hindawi, the experiment results on the 2D robot arm game showed that the reward gained by the aggregated policy is 10%–50% better than those gained by subpolicies, and experiment results on the TORCS demonstrated that the new algorithm can learn successful control policies with less training time by 56.7%.

DDPG algorithm operating over continuous space of actions has attracted great attention for reinforcement learning. However, the exploration strategy through dynamic programming within the Bayesian belief state space is rather inefficient even for simple systems. This usually results in failure of the standard bootstrap when learning an optimal policy.

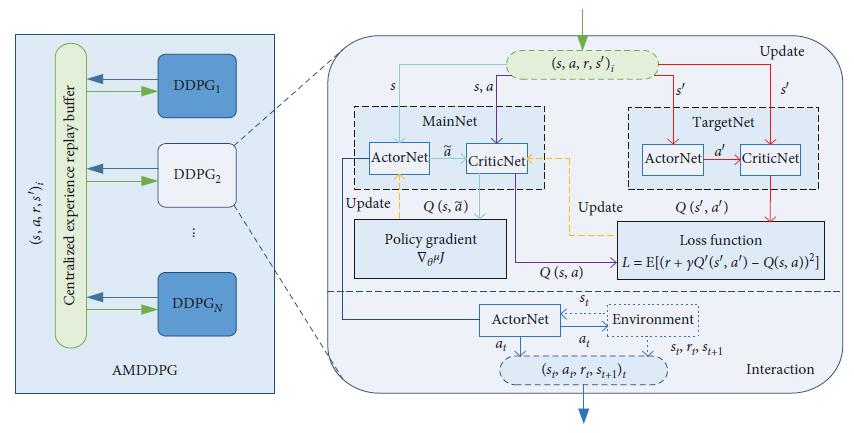

In consideration of the above shortcomings, the proposed algorithm uses the centralized experience replay buffer to improve the exploration efficiency. Since m-out-of-n bootstrap with random initialization produces reasonable uncertainty estimates at low computational cost, this helped in the convergence of the training. The proposed bootstrapped and aggregated DDPG can substantially reduce the learning time.

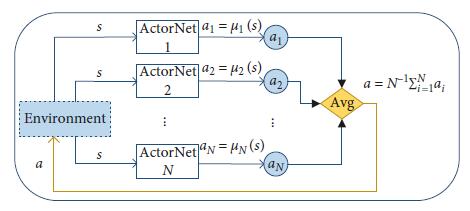

The structure of multi-DDPG with the centralized experience replay buffer is shown in Figure1, and Figure2 demonstrated that BAMDDPG averages all action outputs of trained subpolicies to achieve the final aggregated policy.

BAMDDPG enables each agent to use experiences encountered by other agents. This makes the training of subpolicies of BAMDDPG more efficient since each agent owns a wider vision and more environment information.

This method is effective to the sequential and iterative training data, where the data exhibited long-tailed distribution, rather than the norm distribution implicated by the i.i.d. (independent identically distributed) data assumption. The method can learn the optimal policies with much less training time for tasks with continuous space of actions and states.

Figure1. Structure of BAMDDPG. (Image by Prof. LI Huiyun)

Figure2. Aggregation of subpolicies. (Image by Prof. LI Huiyun)

CONTACT:

ZHANG Xiaomin

Email: xm.zhang@siat.ac.cn

Tel: 86-755-86585299